If your website is not indexed by search engines, it cannot show up for any search queries. Search engines add web pages to their index after they crawl the web and discover new pages. A web spider (crawler) carries out this crawling at scale, and Google's web spider is called 'Googlebot'. When you search on Google, you are asking it to return the relevant web pages from its vast index. Google's ranking algorithm then sorts those pages so that the most relevant results appear at the top.

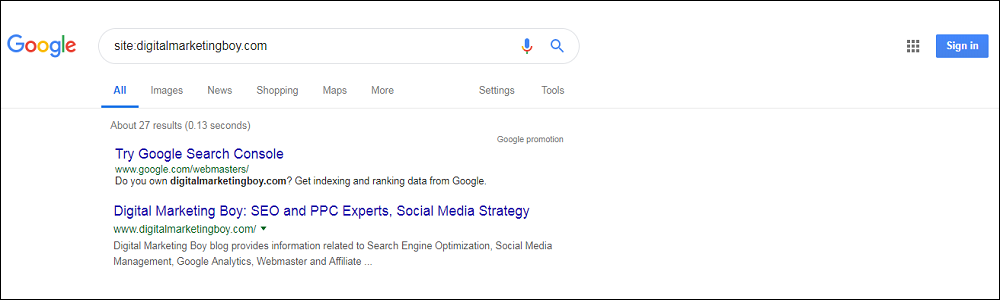

Digital Marketing Boy suggests getting your web pages indexed first and then working on their rankings. A simple way to check whether your website is indexed is to search Google for site:yourdomain.com. A web page that is not indexed by Google will not show up in these results. For more detail, use Google Search Console, one of the most powerful SEO tools: go to Google Search Console > Coverage to see which web pages are valid and which are excluded. The URL Inspection tool in Google Search Console also lets you monitor the indexing status of individual web pages.

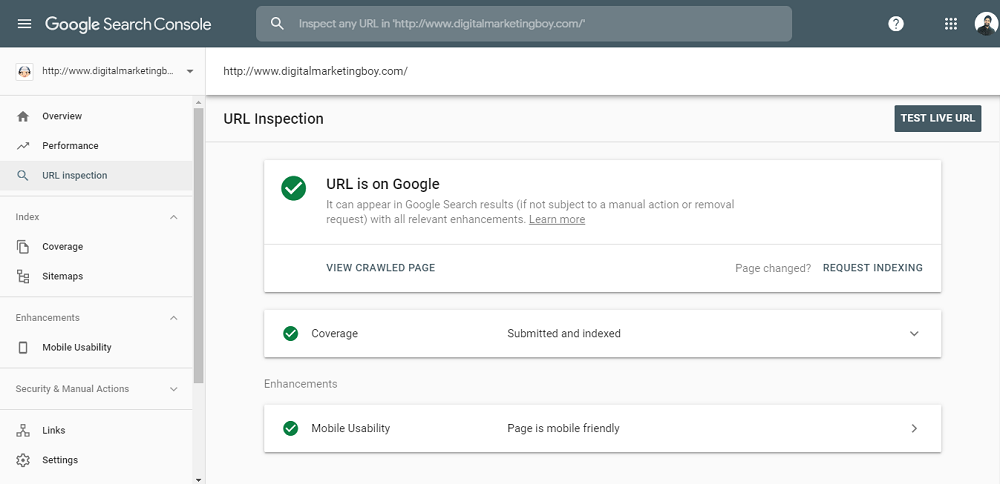

Suppose you find that your website or one of its web pages is not indexed by Google. Open Google Search Console, click URL Inspection and enter the URL of your web page in the search bar. Google will check the status of the URL; then click 'Request Indexing' to complete the process. This is a very good practice because it tells Google that you have added a new web page to your website and that Google should take a look at it.

Now we will discuss the issues that prevent Google from indexing web pages:

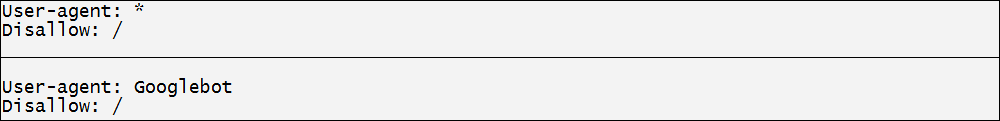

A. Analyze Robots.txt: Search engine crawling may be blocked in your Robots.txt file. Digital Marketing Boy recommends accessing the root directory of your server and viewing the Robots.txt file. A 'Disallow: /' rule under 'User-agent: Googlebot' (or under 'User-agent: *') tells Googlebot not to crawl any of your web pages. You can fix this issue by removing the blocking rules from the Robots.txt file.
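To confirm what the rules in your Robots.txt file mean for Googlebot, you can test them with Python's built-in `urllib.robotparser` module. The sketch below is a minimal example that assumes you paste the file's contents into a string; the sample rules and the URL are hypothetical. Note that `robotparser` applies the first matching rule in file order, while Google uses the most specific match, so results can differ in edge cases.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the Googlebot group blocks every path,
# while all other crawlers are allowed.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /

User-agent: *
Allow: /
"""


def googlebot_can_crawl(robots_txt: str, url: str) -> bool:
    """Return True if the robots.txt rules allow Googlebot to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


if __name__ == "__main__":
    print(googlebot_can_crawl(ROBOTS_TXT, "https://yourdomain.com/new-page/"))
```

Running it prints `False`, because the 'Googlebot' group disallows every path. Once the blocking rule is removed, the same call returns `True`.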

B. Analysis of Orphan Pages: Web pages that no other page on your website links to are called 'Orphan Pages'. Download a full list of pages from your server and compare it with the pages your internal links point to. If an orphan page is not important, delete it from the server; otherwise, link to it from relevant pages so that it becomes part of your website structure.
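Once you have the full list of pages and the internal links found on each of them (for example, from a site crawler export), finding orphan pages comes down to a set difference: all pages minus every page that some other page links to. The page paths and link data below are hypothetical sample data, not output from any particular tool.

```python
def find_orphan_pages(all_pages: set[str], internal_links: dict[str, set[str]]) -> set[str]:
    """Return the pages that no *other* page links to."""
    linked_to: set[str] = set()
    for source, targets in internal_links.items():
        # Ignore self-links: a page linking to itself does not make it reachable.
        linked_to.update(target for target in targets if target != source)
    return all_pages - linked_to


# Hypothetical sample data: every page on the server, and the internal
# links found on each page (page -> set of pages it links to).
ALL_PAGES = {"/", "/about/", "/blog/seo-tips/", "/old-landing-page/"}
INTERNAL_LINKS = {
    "/": {"/about/", "/blog/seo-tips/"},
    "/about/": {"/"},
    "/blog/seo-tips/": {"/", "/about/"},
    "/old-landing-page/": {"/"},  # links out, but nothing links in
}

if __name__ == "__main__":
    print(sorted(find_orphan_pages(ALL_PAGES, INTERNAL_LINKS)))
```

This prints `['/old-landing-page/']`. If nothing on your site links back to the homepage, it will show up in the result too, so review the output before deleting anything.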

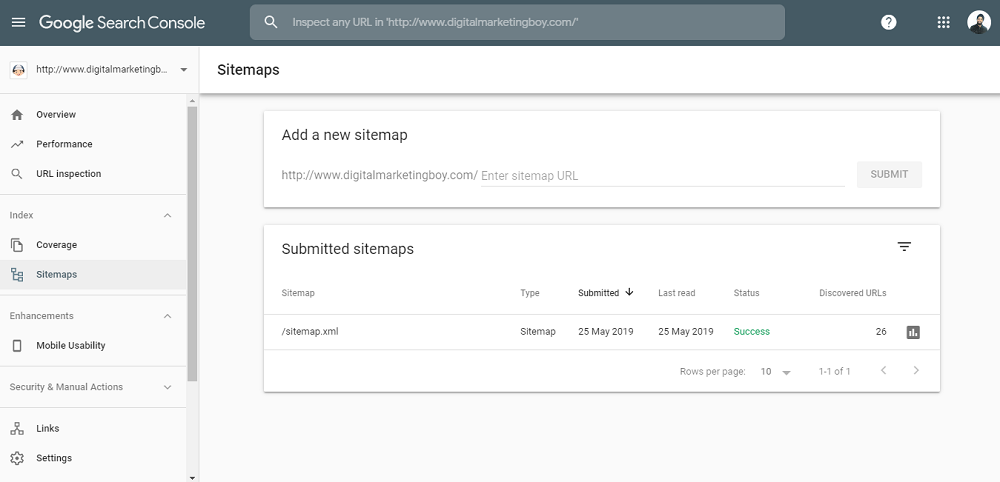

C. XML Sitemap: Adding your web pages to an XML Sitemap is considered a good practice. You can check whether your web pages are listed in the XML Sitemap through Google Search Console or by opening the sitemap in the root directory of your server (yourwebsite.com/sitemap.xml). Make sure all the web pages you want indexed are in the XML Sitemap, and tell Google about it by submitting your XML Sitemap in Google Search Console > Index > Sitemaps.
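The sitemap check can be automated: parse the sitemap, collect its `<loc>` entries and compare them with the pages you want indexed. This minimal sketch uses Python's standard `xml.etree.ElementTree`; the sitemap contents and page URLs are hypothetical, and it handles a single sitemap file rather than a sitemap index that points to several sitemaps.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap contents (normally fetched from yourwebsite.com/sitemap.xml).
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://yourwebsite.com/</loc></url>
  <url><loc>https://yourwebsite.com/about/</loc></url>
</urlset>"""

# Hypothetical list of pages you want Google to index.
PAGES = [
    "https://yourwebsite.com/",
    "https://yourwebsite.com/about/",
    "https://yourwebsite.com/blog/new-post/",
]


def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Return every <loc> URL listed in a single (non-index) sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def missing_from_sitemap(pages: list[str], sitemap_xml: str) -> set[str]:
    """Return the pages that are not listed in the sitemap."""
    return set(pages) - sitemap_urls(sitemap_xml)


if __name__ == "__main__":
    print(missing_from_sitemap(PAGES, SITEMAP_XML))
```

Here the new blog post is reported as missing, so it should be added to the sitemap before you resubmit it.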

D. Analysis of Canonical Tags: The canonical tag tells search engines which URL is the preferred version of a page. You can use URL Inspection in Google Search Console to check the canonical status of a web page. If you want page A to be indexed but its canonical tag points to page B, fix this issue immediately.
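To spot the page A / page B problem outside Search Console, you can read the `<link rel="canonical">` tag from a page's HTML and compare it with the page's own URL. This is a simplified sketch using Python's standard `html.parser`; it assumes you already have the HTML, ignores a canonical sent in an HTTP `Link` header, and treats URLs that differ only by a trailing slash as the same. The page URLs are hypothetical.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def canonical_points_elsewhere(page_url: str, html: str) -> bool:
    """True if the page declares a canonical URL other than its own."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonical:
        return False  # no canonical tag found in the HTML
    # Resolve relative hrefs and ignore a trailing-slash difference.
    canonical = urljoin(page_url, finder.canonical)
    return canonical.rstrip("/") != page_url.rstrip("/")


# Hypothetical page A whose canonical tag points to page B.
PAGE_A_HTML = '<head><link rel="canonical" href="https://yourwebsite.com/page-b/"></head>'

if __name__ == "__main__":
    print(canonical_points_elsewhere("https://yourwebsite.com/page-a/", PAGE_A_HTML))
```

This prints `True`, flagging page A for review. A self-referencing canonical (page A pointing to itself) returns `False`.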

E. Check X-Robots-Tag and Meta Tags: Search engines will not index a web page if the 'noindex' value appears in the 'X-Robots-Tag' HTTP header, the robots meta tag or the googlebot meta tag. You can fix this issue by removing the 'noindex' value from them.
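Both places where 'noindex' can hide can be checked in one pass. The sketch below assumes you already have a page's response headers as a dictionary and its HTML as a string (both hypothetical inputs here), and flags the page if the 'X-Robots-Tag' header or a robots/googlebot meta tag contains 'noindex'. It is deliberately simple: it does not separate directives aimed at other crawlers, and a plain dictionary can hold only one 'X-Robots-Tag' header.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            self.directives.append((attributes.get("content") or "").lower())


def has_noindex(headers: dict[str, str], html: str) -> bool:
    """True if the X-Robots-Tag header or a robots/googlebot meta tag contains noindex."""
    for key, value in headers.items():
        # HTTP header names are case-insensitive.
        if key.lower() == "x-robots-tag" and "noindex" in value.lower():
            return True
    finder = RobotsMetaFinder()
    finder.feed(html)
    return any("noindex" in directive for directive in finder.directives)


if __name__ == "__main__":
    # Hypothetical response headers and HTML for two pages.
    print(has_noindex({"X-Robots-Tag": "noindex"}, "<html></html>"))
    print(has_noindex({}, '<meta name="robots" content="index, follow">'))
```

The first call prints `True` (blocked by the header) and the second prints `False` (the meta tag allows indexing).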

F. Nofollow Tag: It is better to remove the nofollow value from internal links on your website. Google treats nofollow as a hint not to follow a link, so pages that are reached only through nofollow links may not get crawled.
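To find internal links that carry the nofollow value, you can scan a page's `<a>` tags and keep those whose rel attribute includes 'nofollow' and whose target is on the same host. This is a minimal sketch with Python's `html.parser`; the page URL and HTML are hypothetical, and 'internal' is assumed to mean exactly the same host name (so www and non-www versions count as different sites).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class NofollowLinkFinder(HTMLParser):
    """Collects internal <a> links whose rel attribute includes nofollow."""

    def __init__(self, page_url: str) -> None:
        super().__init__()
        self.page_url = page_url
        self.site_host = urlparse(page_url).netloc
        self.nofollow_internal: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        target = urljoin(self.page_url, href)  # resolve relative links
        rel_values = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_values and urlparse(target).netloc == self.site_host:
            self.nofollow_internal.append(target)


def internal_nofollow_links(page_url: str, html: str) -> list[str]:
    """Return the absolute URLs of internal links marked nofollow."""
    finder = NofollowLinkFinder(page_url)
    finder.feed(html)
    return finder.nofollow_internal


# Hypothetical page with one internal nofollow link, one external
# nofollow link and one normal internal link.
PAGE_URL = "https://yourwebsite.com/blog/"
PAGE_HTML = (
    '<a href="/new-page/" rel="nofollow">New page</a>'
    '<a href="https://example.com/" rel="nofollow">External</a>'
    '<a href="/about/">About</a>'
)

if __name__ == "__main__":
    print(internal_nofollow_links(PAGE_URL, PAGE_HTML))
```

Only the internal link to `/new-page/` is reported; nofollow on external links is left alone, since this item is about internal links.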

G. Powerful Internal Links: If you want Google to index a new web page quickly, link to it from your most powerful (authoritative) pages. Google recrawls authoritative pages more often than less authoritative ones, so it will find the new link sooner.

H. High Quality Pages: Make sure each of your web pages is high quality, because low-quality pages offer little value to users. Improve the content of your web pages and fix any technical issues.

I. High Quality Backlinks: Google uses backlinks to help identify the importance of your web pages. Web pages with high quality backlinks are more likely to be crawled and recrawled by Google.

Getting indexed does not mean your web pages will start ranking. Indexing only makes Google aware of your web pages. To rank, your SEO expert should identify the search queries your customers use, provide content suggestions, optimize web pages for your target keywords, implement schema markup and build backlinks regularly. When Google is not indexing a web page, the cause is usually a technical issue or low page quality. Go through the points above if you are facing any indexing issues.
